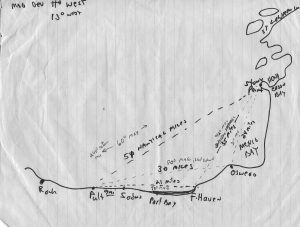

Many years ago I was fortunate to have two friends who each owned a wooden sailboat. One was a 31-foot Norwegian Knarr made from African mahogany and the other was a 28-foot sloop. To earn a place on the crew I pitched in with all the work of maintaining those boats during the winter. Because one of us always had to work, and we still had to pick up supplies and drive out to the yacht club, we often left port towards sunset. Night sailing can be fun, but one really has to rely on one’s navigation skills, using a chart and compass and at times dead reckoning, to make sure that in the middle of the night we pass safely by a marked shoal and do not sail straight into one. To plot the course, several variables (for example, magnetic variation) (1) were factored into the calculations. If one of the variables was not factored in correctly, we could run into an unwanted surprise.

In much the same way, the same consideration should be given to developing and applying a cybersecurity policy. I have written before in this blog about the importance of answering the 3 security questions and of understanding the peculiar differences between IT and OT cybersecurity. Failure to account for these differences will likely lead to a poor cybersecurity policy which, if followed, could lead the institution onto the shoals. Reading the recently issued NIST Special Publication 800-184, “Guide for Cybersecurity Event Recovery” (2), I find myself wondering whether a new example of this failure has appeared.

In the first paragraph of this Guide, which is intended for policy and decision makers, one runs into a curious statement that seems to reveal one of the assumptions behind the document:

“There has been widespread recognition that some of these cybersecurity (cyber) events cannot be stopped and solely focusing on preventing cyber events from occurring is a flawed approach.” (3)

What is assumed here? What kind of cyber threat is thought to be no longer stoppable? Are the authors thinking about a state-resourced APT that will not stop until its objective is achieved, regardless of what the victim puts up in defense? A “resistance is futile” condition, to borrow a phrase from Star Trek? Or are we talking about a cybercrime or hacktivist threat? Since the threat is not clearly identified, it is hard to evaluate the weight behind the assumptions made by the authors of the Guide.

From the beginning, we see that the possibility of taking steps to prevent damage from a cyber incident or intentional attack before it happens is downgraded in favor of preparing a recovery plan or “playbook” to apply after something unpredictable has happened. This may at first appear sensible, yet in the examples provided many of the lessons learned from post-event recovery apply equally to prevention. In the example given of a “Data Breach Cyber Event Recovery Scenario”, one of the later recovery actions, “Strategic Recovery”, proposes long-term goals for “implementing stronger authentication mechanisms, isolating administrative processes from general productivity activities, implementing least privilege principle, using encryption to protect customer’s data, and deploying better monitoring capabilities” (4). The reader may ask why these already credible policies need to be learned after suffering an incident. Shouldn’t they already have been applied in the prevention phase of the recovery cycle, before an incident happens?

Another of several odd points in this Guide is the language devoted to the importance of communications. Yes, one can at first say “spot on”, but on reading further one again begins to wonder whether something important is being missed. In a section titled “Recovery Communications” we are told that this “includes non-technical aspects of resilience such as management of public relations issues (bold type is mine) and organizational reputation” (5). One of the motivations behind this document seems to be a response to the several publicized and embarrassing data breaches in the IT systems of Government institutions. The public relations aspects after a publicized data breach are important, but other aspects of communications need to be addressed as well. Not appreciated is the importance of the reliable communications systems and links used in the operation of IT systems, and especially of control systems. The importance of communications in the remote monitoring and management of the industrial control systems that support critical infrastructure, such as power grids, is not recognized here.

The cyber-attack executed against the control systems of power grid operators in Ukraine in December 2015 also targeted the communications systems. The serial-to-Ethernet devices used to link the SCADA systems with the remote substations were disabled by unauthorized firmware installed on them (6). For a Guide dedicated to developing recovery plans in the event of a cyber incident, the lack of language on the non-PR side of communications is a serious flaw.

One can perhaps argue that this Guide applies to IT and not OT systems. This is perhaps a convincing argument to those who like to compartmentalize useful information into categories. However, industrial control systems are briefly mentioned in this document, albeit inside a subcategory box in Appendix B (7). Why then not develop the control system topic, add more OT reference documents (in addition to the single reference to the NIST Framework for Improving Critical Infrastructure Cybersecurity) and include them in Appendix D – References (8)? These control systems also make extensive use of IT and are highly dependent upon IT for their functions. A major section that should be here is missing. Perhaps the authors think that if this is important it will naturally surface in the post-recovery lessons-learned phase. This kind of thinking would not be of much use on a night sail and does not offer much to an operator of critical infrastructure. Anyone who takes this Guide as the main word on navigating in the area of cybersecurity can likely expect some unpleasant sailing ahead.

References:

1. https://www.ngdc.noaa.gov/geomag/declination.shtml

2. http://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-184.pdf

3. Ibid., page vi, Executive Summary.

4. Ibid., page 28.

5. Ibid., pages 12-12.

6. https://ics-cert.us-cert.gov/alerts/IR-ALERT-H-16-056-01

7. Appendix B, titled “CSF Core Components and SP 800-53r4 Controls Supporting Recovery”, under the listed function of Protect. http://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-184.pdf

8. Ibid., page 44.