I remember participating in a working group of national representatives tasked with coming up with norms for confidence and security building measures (CSBM) for states to follow in cyberspace. It was quite exciting to be a part of at first, but the discussions slowed down when a representative of a cyber-superpower raised the issue of definitions. He said that before proceeding we needed to agree on the terms used. This looked like a stalling tactic (in retrospect, it probably was) to keep our group from coming up with concrete proposals. Nothing much came of this work, but I later came to appreciate what that delegate was saying. In developing policies to protect critical infrastructure we need to make sure the policy matches the object we have identified for protection. Otherwise we may miss the objective and waste a lot of time in the long run.

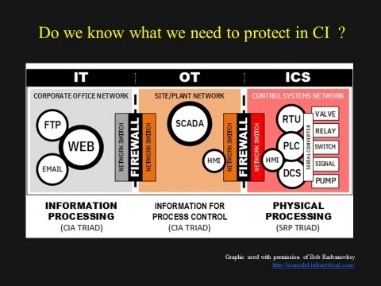

Three terms often used in discussing Critical Infrastructure Protection (CIP) are Information Technology[1] (IT), Operational Technology (OT) and Industrial Control Systems (ICS). IT was the comfort zone I worked in for nearly 30 years. This is the technology space most policy makers are familiar with, for it sits on their desks in the office and in their studies at home. Very few of these policy makers have seen a PLC (programmable logic controller), work with one at home, or know where they are placed in an industrial operation. The security priorities of IT are often described in terms of confidentiality, integrity and availability, or CIA for short: access to the data or information must be controlled, it must be presented and/or reproduced accurately, and it must be retrievable when needed. Devices are networked and have access to the outside world, a.k.a. the Internet, where there is more connectivity and interaction going on. In an industrial enterprise this is where the administration, billing, accounting and dispatching (of orders) live.

The next term that comes up is Operational Technology (OT), which has to do with the activities going on outside the administration or office IT part of the enterprise. This is the part of an industrial operation where IT hardware and software (for example SCADA) are used to monitor and control a physical process taking place at another location. The physical process being monitored could be petrochemical as in an oil refinery, water treatment for a community’s drinking water, pumping fuel or compressing gas down a pipeline, routing trains down a railroad, or the generation and distribution of electricity in a power grid. OT is found in a control room where IT is also present, but there it is not primarily used for email and billing. Instead it monitors the electronic devices located closest to where a physical process is taking place. This monitoring in the control room is done using a human machine interface (HMI), which presents what the devices are saying about the physical process in human-understandable form on a screen.

Then we come to industrial control systems (ICS). This is where the devices that directly monitor and control a physical process are located (pumps, valves, sensors, actuators, PLCs). This is also where I see a lot of confusion and mixing of terms. One characteristic of ICS devices, especially PLCs and Safety Instrumented Systems (SIS), is that they are autonomous, meaning they have all they need to perform the activities they are assigned (programmed) to perform. The PLC, for example, is programmed to monitor and react to basic or normally expected changes in the physical process. Some of these devices are not on an IP network and do not need the IT and OT layers above them to perform their functions. That is why they are autonomous. What this means is that if the enterprise’s IT and OT go down (if you have a Windows computer you know what that is like), the physical process being monitored and controlled by the ICS is still going to do something. A good example of operators (working in the control room, on the OT side) losing view and control of a critical physical process is the Bellingham, Washington gasoline pipeline explosion, which was caused in part by a slow and unresponsive SCADA system[2].
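To make the point about autonomy concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the `TankPLC` class, the tank-level process, the set points and the fill and drain rates are all hypothetical, and real PLCs are programmed in languages such as ladder logic rather than Python. The sketch shows a controller that keeps regulating a process whether or not the supervisory (OT) layer is receiving its reports.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TankPLC:
    """Hypothetical controller that keeps a tank level between two set points."""
    low_setpoint: float = 20.0   # percent; start the pump at or below this level
    high_setpoint: float = 80.0  # percent; stop the pump at or above this level
    pump_on: bool = False

    def scan(self, level: float) -> bool:
        """One scan cycle: read the sensor value, decide, drive the output."""
        if level <= self.low_setpoint:
            self.pump_on = True
        elif level >= self.high_setpoint:
            self.pump_on = False
        return self.pump_on


def simulate(steps: int, scada_online: bool) -> Tuple[List[float], List[float]]:
    """Run the process and return (actual levels, levels seen in the control room)."""
    plc = TankPLC()
    level = 50.0
    actual_levels: List[float] = []
    operator_view: List[float] = []
    for _ in range(steps):
        pump_on = plc.scan(level)
        # Assumed process: the pump adds 5 units per step, demand drains 3.
        level += (5.0 if pump_on else 0.0) - 3.0
        actual_levels.append(level)
        if scada_online:
            # Reporting upward is a side channel; control does not depend on it.
            operator_view.append(level)
    return actual_levels, operator_view


if __name__ == "__main__":
    actual, seen = simulate(steps=100, scada_online=False)
    print(f"process steps run: {len(actual)}, values seen by operator: {len(seen)}")
```

With `scada_online=False` the operator's view is empty, yet the level trajectory is exactly the same as with the link up. That is the loss-of-view situation described above: the process carries on, but nobody in the control room can see what it is doing.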

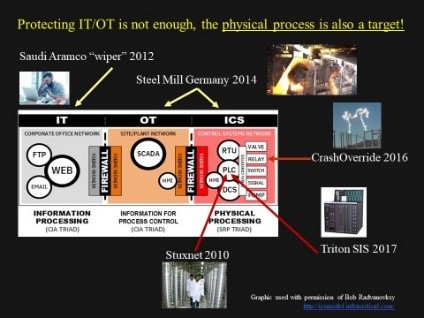

Understanding what IT, OT and ICS are and what they do is vital to the development of cybersecurity policies for CIP. Not getting them straight will lead to working under false assumptions and, in turn, to bad policy. I saw a sign of this when I listened to a government’s presentation about national cybersecurity policy. The official responsible for cybersecurity policy spoke about protecting critical infrastructure including “SCADA” (Supervisory Control and Data Acquisition) systems. From his description one could conclude that the protection measures extended only to the OT part of critical infrastructure. The ICS closest to the physical process was left alone, possibly under the mistaken assumption that it is automatically covered by the IT/OT biased security policy. This is a dangerous body of water to be swimming in, for incidents also take place in the ICS space, which is a new target for attacks.

This confusion in terms shows up elsewhere. In a recent discussion on SCADASEC about NIST’s new proposal for a “Zero Trust Architecture”[3] (ZTA), a question was raised about ZTA’s applicability to industrial environments. NIST’s definition of zero trust explains an interesting assumption to the reader:

“Zero trust (ZT) provides a collection of concepts and ideas designed to minimize uncertainty in enforcing accurate, least privilege per-request access decisions in information systems and services in the face of a network viewed as compromised” (page 4).

One can see something useful here for the IT part of an enterprise’s operations but wonder about its applicability to an ICS environment. How could “zero trust” be applied in an environment where TRUST (in sensors, telemetry) is a critical element of the view and control of physical processes? This is an environment where processes are conducted in real time with low tolerance for latency, and where protective measures are executed to save property and lives when a process goes beyond pre-assigned set points. One can imagine the difficulties and challenges placed on engineers if a well-meaning security policy designed for IT systems is made mandatory for industrial control systems. Adding security to existing intelligent electronic devices (IEDs) may mistakenly assume that the device has the resources to accommodate the additional computations required. In an ISA 62443 workgroup I participated in, one colleague noted that adding security to an existing device caused it to slow down, and it could not perform its assigned task.
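The resource problem can be pictured as simple arithmetic on a device's cycle time. The sketch below is a hypothetical illustration, not a measurement: the `CycleBudget` class and all the millisecond figures are assumptions chosen only to show how a device that comfortably meets its deadline can miss it once extra security computations are added to every cycle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CycleBudget:
    """Hypothetical time budget for one control cycle of a constrained device."""
    cycle_ms: int         # deadline: the control task must finish within this time
    control_ms: int       # time spent on the assigned control task
    security_ms: int = 0  # extra time for added security computations (assumed)

    @property
    def headroom_ms(self) -> int:
        """Time left over in the cycle; negative means the deadline is missed."""
        return self.cycle_ms - (self.control_ms + self.security_ms)

    @property
    def meets_deadline(self) -> bool:
        return self.headroom_ms >= 0


if __name__ == "__main__":
    # Assumed figures: a 10 ms cycle, 7 ms of control logic, 4 ms of added security.
    before = CycleBudget(cycle_ms=10, control_ms=7)
    after = CycleBudget(cycle_ms=10, control_ms=7, security_ms=4)
    print(before.meets_deadline, before.headroom_ms)
    print(after.meets_deadline, after.headroom_ms)
```

In office IT a missed deadline of this kind usually means a slower response. In a control loop it can mean the device no longer performs the task it was installed to do, which is the situation my colleague described.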

I also notice an innocent mixing of IT/OT/ICS terminology in the presentations made by security vendors who are making noble efforts to address gaps in training and awareness. I found this webinar invitation in my inbox with the title “Building and Retaining an ICS Cybersecurity Workforce”[4]. It was organized by two leading cybersecurity companies working in CIP. Here is a telling quote from the invitation:

“Many industrial organizations face challenges training and developing their IT/OT staff to defend against increasing cyber threats. There is a worldwide cybersecurity skills shortage, and as the workforce gap continues to grow, it is putting organizations at higher risk.”

I ask myself “what about ICS, where did that go?” Instead it seems that the most important part for securing an industrial operation (where the physical process is found) is being missed completely. The emphasis on addressing the skills shortage mentioned stops apparently at the HMI in the control room located far from those working intimately with the devices closest to the physical process.

This lack of clarity in terms raises the issue of whether those who talk about critical infrastructure protection know enough about the “what to protect” in order to provide competent advice on “how to protect”. I see some ambiguity in the terms used to describe industrial operations and would prefer clear distinctions based on the realities (physics) of industrial operations. In the scramble to gain reputation and increase market share, IT network centric cybersecurity companies should perhaps reconsider what they are proposing and the consequences of selling a security policy that does not address what is most relevant to protecting industrial environments: the ICS side. Many engineering professionals in industry perhaps know intuitively how to distinguish IT and OT from ICS. However, the ones who make the policy which the industry professional may have to implement may not. There is much wisdom to be drawn here from the children’s story of the 3 Little Pigs. Only one of them answered the questions of what needs to be protected and from what threats, and came up with a solution (the how) that was appropriate for the environment where the 3 pigs lived.


Postscript: The above blog entry drew some reaction and comment on the SCADASEC mailing list. As an “epilogue”, and as a way to finish the thought, I have attached my reply below:

[ “I hear you but I am coming from the other end of the problem and believe me I have tried the techniques you advised.

* In 2011, while a member of an OSCE working group tasked with coming up with confidence and security building measures (CSBM) for states to follow in cyberspace, I pointed out the implications of Stuxnet for critical infrastructure and the need to introduce measures that would help manage this new threat to the C.I. of other nations. This proposal was met with displeasure from a colleague representing one of the cyber superpowers.

* Later I worked on national cybersecurity strategy, and when the discussions focused on protecting ministry websites and the computers in people’s homes I pointed out the importance of protecting the industrial processes involved in providing electricity to those ministry websites and PCs. I can still remember eyes glazing over after I spoke.

* As a member of a task force preparing a draft law on cybersecurity, I tried to point out the risk of ambiguity in using the term “Critical Information Infrastructure”. I pointed out that this term is subject to interpretation, which can confuse practical application of the law in the industrial sector, where the remote management and control of a critical physical process, not “information”, is the priority (later we heard a utility operator say they are not a part of CII and the law does not apply to them). One IT security colleague responded by saying “what the hell are you talking about protecting process control!” The term stayed in place with the understanding that CII includes power grid, water, and transport control systems, which in practice still kept the focus on the Office IT of the utility and not so much on the plant floor.

* I was part of exercise development teams for national and international cybersecurity exercises where cyber attacks on critical infrastructure were included in the scenario. In one case, while developing a scenario for a power blackout in a nation’s capital, my repeated proposals (over 3 years) to invite representatives from the power utility (those who could help us construct a realistic scenario to allow for maximum lessons learned) were rejected.

In over 10 years of participating in meetings and working groups, while there were many diplomats, IT security specialists or IT policy oriented officials, THERE NEVER WAS AN ENGINEER nor a PROTECTION ENGINEER IN THE ROOM. In none of the meetings I attended where critical infrastructure protection was the topic did anyone mention the standards you listed. To policy making people, beyond recognizing ISO 27000, they do not exist. However I do not fail to mention standards relevant to control systems such as ISA 62443 and others (IEC 61508 Electrical, electronic/programmable safety systems, IEC 62280 Railway security systems, API 1164 Oil and Natural Gas Security, NIST 800-82 Guide to ICS Security, etc.) in my work:

In the visits I have made to asset owners in critical infrastructure sectors I was usually met by the CISO, who took me on a tour of the server room. He was somewhat taken aback when I asked to visit the engineers in the control room who manage the pumping stations, compressors and other industrial equipment. The response, which I heard more than once, was “sure we can arrange a meeting BUT I HAVE NEVER MET THEM BEFORE”.

My visits included a funny surprise after the meetings were arranged with the participation of IT and Engineers. It was remarkable to see the interaction of the two groups. I was even thanked sometimes by my hosts for bringing them together in one room for the first time. Before that they mostly used the phone to collaborate.

In my experience I have learned that there is a dangerous IT bias in making policy about C.I. With all due respect to the engineers who have the “thousands of years of experience” and do get the difference between IT and ICS, their voice is not coming across. Instead I see IT security policy being applied in industrial control systems. I have mentioned this in SCADASEC before in regard to institutions issuing cybersecurity frameworks and guides. Instead, IMO, the SANS 20 and ISO 27000 dominate this narrative and are of little help to those trying to improve the safety, reliability and efficiency of industrial operations that involve monitoring and managing a physical process.

This is why in my presentations to mostly IT oriented audiences I make a point of introducing them to what the other side of the moon is like in the world of power generation and distribution, water supply, transportation and fuel pipeline operations. The title of the presentation is “The Cybersecurity Dimension of Critical [x] Infrastructure”. I explain that the X stands for the C.I. one is focusing on. In this sector it is important to know the difference between IT, OT and ICS and I give examples where misunderstanding their significance has led to accidents and to exploitation of unprotected systems by malicious actors. Policy makers need to know this.

Energy operations, water, transportation, you name it, all use Industrial Automation and Control Systems (IACS), which by the way is a well-defined and clear term used throughout the ISA 62443 standards documents.

Here is a thought: why not drop OT/ICS altogether and use IACS as defined by ISA 62443:

“industrial automation and control system (IACS)

collection of personnel, hardware, software, procedures and policies involved in the operation of the industrial process and that can affect or influence its safe, secure and reliable operation

Note to entry: These systems include, but are not limited to:

1. industrial control systems, including distributed control systems (DCSs), programmable logic controllers (PLCs), remote terminal units (RTUs), intelligent electronic devices, supervisory control and data acquisition (SCADA), networked electronic sensing and control, and monitoring and diagnostic systems. (In this context, process control systems include basic process control system and safety-instrumented system [SIS] functions, whether they are physically separate or integrated.)

2. associated information systems such as advanced or multivariable control, online optimizers, dedicated equipment monitors, graphical interfaces, process historians, manufacturing execution systems, and plant information management systems.

3. associated internal, human, network, or machine interfaces used to provide control, safety, and manufacturing operations functionality to continuous, batch, discrete, and other processes.”

I like this definition, for it clearly takes one out of the Office IT environment and into the control room and the equipment in the field closest to the physical process. We are talking about a different place with different priorities and ways of doing things. You [engineers] know this but many others with influence do not. If this usage is more widely applied and accepted by policy makers, then this will make things easier for me and for other non-engineers working in the industry. One would hope that a better informed policy maker would make the job easier for the engineers.

How does using IT and IACS without the “OT” sound?

Sorry for the lengthy reply and “rant”.

Respectfully,

Vytautas (Vytas) Butrimas

Opinions and views are the author’s and do not represent the official view of any institution he is associated with.]

[1] Especially true when, as in Lithuania and other countries, the term Critical Information Infrastructure (CII) is used. In those cases the Office IT, PCs, servers, e-mail and web sites seem to be the main focus, even though some may incorrectly assume that the term also covers the industrial OT/ICS sides.

[2] https://www.ntsb.gov/investigations/AccidentReports/Reports/PAR0202.pdf

[3] https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-207.pdf

[4] https://hub.dragos.com/building-and-retaining-an-ics-cybersecurity-workforce?utm_campaign=Q220%20-%2007%2F22-20%20-%20Webinar%20-%20Building%20and%20Retaining%20an%20ICS%20Cybersecurity%20Workforce&utm_medium=email&_hsmi=91676441&_hsenc=p2ANqtz-9rl7iVRMvyb2LXiwvseXE6CKiHF5zve1JUUYxXpHoZLVRY9rKKoWeRxn2HsE2pdZ5sv5GU9KN5j4HVfgTRXq7rQ8cV0A&utm_content=91670157&utm_source=hs_email